Liberal democracies need a new compute stack - Part 4

Isolation is all you need

“Everything should be built top-down, except the first time.” - Alan Perlis

This succinct statement explains how we deal with the complex adaptive domain and move our understanding into the complicated domain of the Cynefin framework. That transition eventually allows us to optimize solutions to problems that are initially riddled with both known and unknown unknowns. In part four I will look at the importance of isolation in shifting the cyber battlefield in favour of the defenders. Isolation is obviously not all we need to build secure systems, but in this age of AI the reference to "Attention is all you need" was just too tempting.

A short recap of what we have covered so far

Part one: Motivated the need for more secure and less complex online systems, which may include autonomous systems and online services. Because of limited resources, this is a need-to-have for anyone operating outside China or California.

Part two: Identified a possible strategy for future defensible information systems that significantly shifts the cyber battlefield in favour of the defender by isolating failure domains. The strategy is designed to allow for an incremental approach, but it still requires some risk taking. The first step is to build up software runtimes that rely on software-based isolation mechanisms and may suffer some performance overhead. The later step is to invest in hardware/software co-designs that eliminate these trade-offs but also break backward compatibility with software that has not been ported to these new WebAssembly and actor model based systems.

Part three: Delved into the problem at hand and identified the incentive structures that led us to where we are today. Further, we looked at the need to establish low overhead communication and credible isolation by means of hardware mechanisms, and briefly introduced a model of surfaces, gaps and interfaces that will be important when designing defensible systems. Lastly, we looked at the ability to sustain system capabilities over time by ensuring current and future sovereign control, something European countries have become more aware of through the fear of backdoors in Chinese 5G infrastructure.

While I was writing part four, an additional credible commitment problem arose from the White House's 4D chess games: uncertainty with regard to F-35 maintenance, including assurance of the future ability to obtain keys to arm weapons and the updates that may be necessary to adapt to electronic warfare and maintain systems compatibility. In short, it is important to ensure the sustainability of the capabilities we care to obtain, or “strategic autonomy” if you will.

“There’s no silver bullet solution with cyber security; a layered defence is the only viable defence.” - James Scott, Institute for Critical Infrastructure Technology

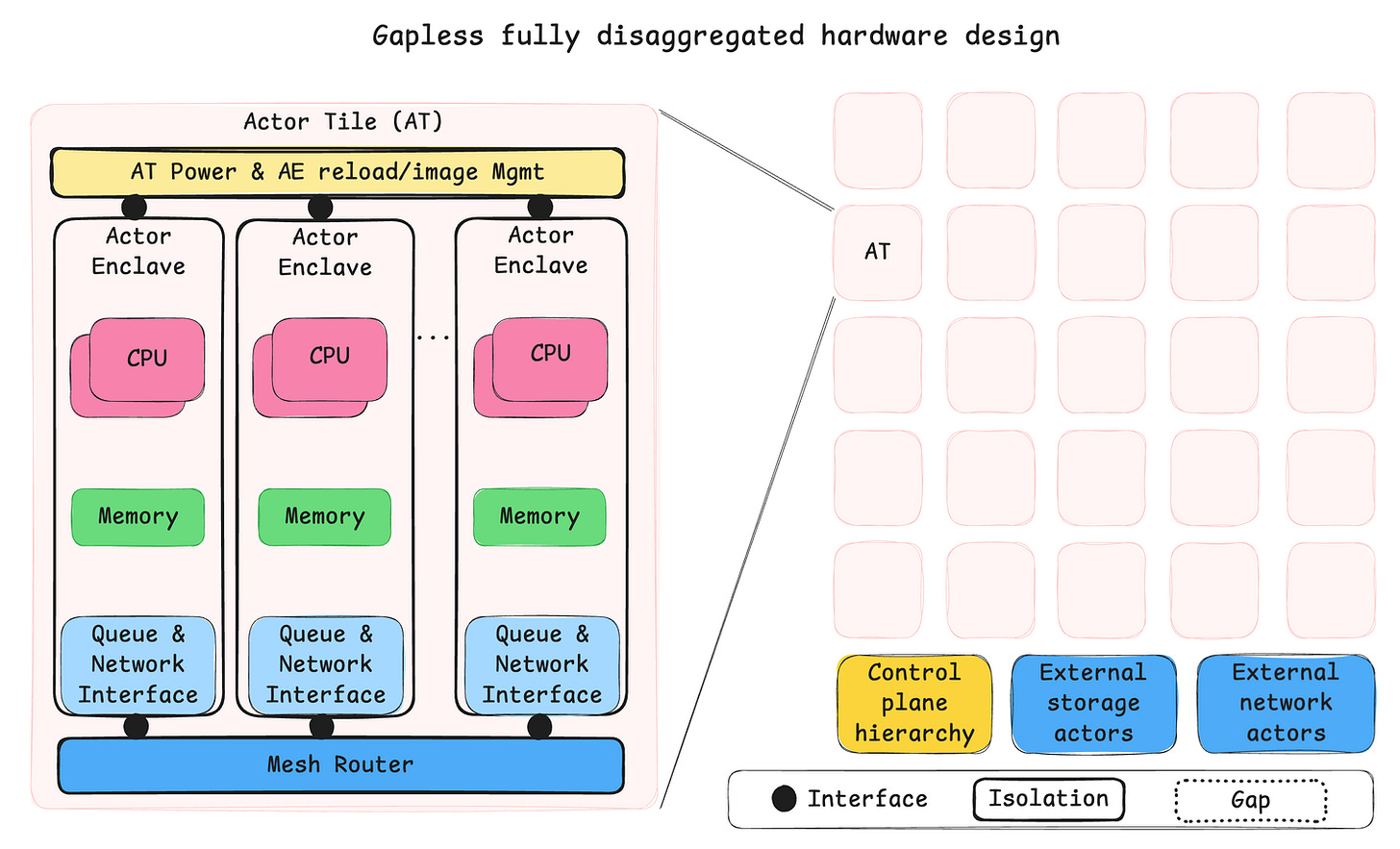

In part four I will try to expand on what we mean by isolation, but first let us take a brief look at what an end-game hardware architecture could look like. This is a total paper tiger, but hopefully also a high-level design that helps visualize a desired state. The hardware design has two primary goals: low overhead communication and credible isolation (later to be renamed durable isolation). It may look fancy at first glance, but it is just a computer cluster on a chip that draws some inspiration from isolation mechanisms like Apple’s Secure Enclave and scale-out strategies like Tenstorrent Grayskull.

I argue that this design is doable, fit for purpose and, importantly, becoming usable now that we see polyglot actor frameworks like the aforementioned wasmCloud system. Previously this type of design would have been much harder to program. Hardware designs with similar characteristics have been around for a long time and still are today. The Tandem NonStop has been mentioned, but the IBM Cell architecture deployed in the Sony PlayStation 3 also bears some resemblance, and if you search for images of Tenstorrent Grayskull you will see the obvious inspiration for the sketch above.

The big insight is that using the actor model allows for fully disaggregated hardware design and arbitrary scale. This breaks the current chains of the scale-up von Neumann architectures, which perhaps exist almost purely because of the incentives created by backwards compatibility.

Moore’s law largely marches on, but importantly without the help of Dennard scaling, so heat density has become the limiting factor. This means that the OS is no longer in sole control of how fast a task will run. Instead, the power management in our systems has the final say. GPUs have already taken this into account, which also relieves the OS of the tedious task of scheduling thousands of threads on thousands of compute entities. In the design above the OS takes a back seat with regard to how fast a task runs. The OS could instruct the control plane about what to run where and at what priority, but the tasks run in parallel, somewhat like they would on a GPU, without the direct interference of OS thread scheduling.

Notes on isolation

“You keep using that word. I don't think it means what you think it means” - William Goldman, The Princess Bride

As much as I really don't want to take a swing at actor frameworks, because I believe very strongly in the strategy they are following, we must set the record straight here. Software-defined isolation on a von Neumann machine has two major issues.

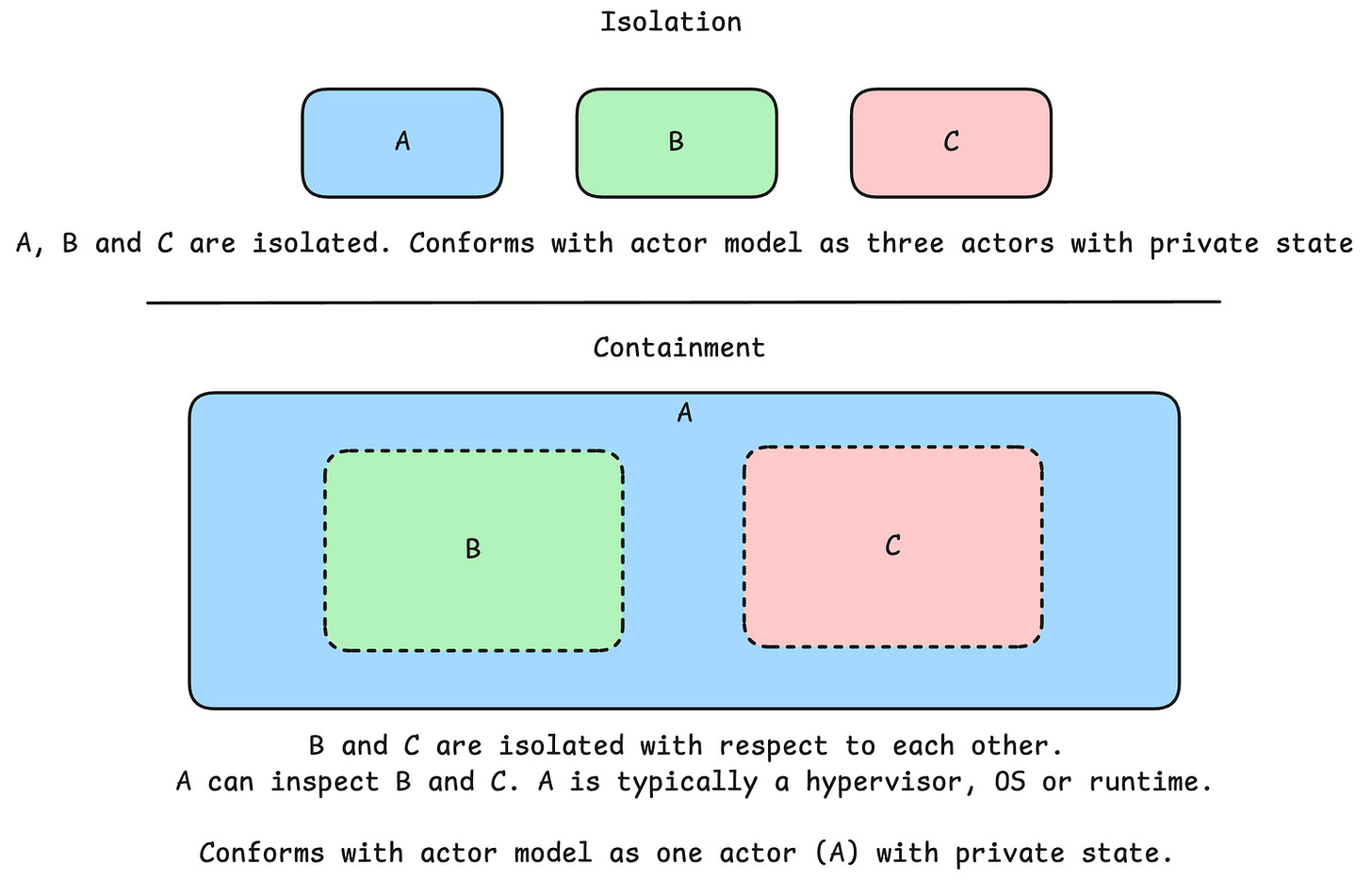

Number one, isolation goes both ways. That means both privacy and containment. If there is no privacy, I will refer to it as containment.

Number two, isolation must be credible in the future. That means it must not be able to wither during the lifespan of the system. I will refer to this as “Durable isolation”, and the counterexample will be “Pliable containment”.

Carl Hewitt laid out the definition of the actor model like this.

An Actor is a computational entity that, in response to a message it receives, can concurrently:

- send a finite number of messages to other Actors;

- create a finite number of new Actors;

- designate the behaviour to be used for the next message it receives.

The definition is extremely terse. This is economy of mechanism in practice. Take note that there is absolutely no way to inspect the interior of an actor. You may only send it messages. The actor has private state.
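To make the "you may only send it messages" point concrete, here is a minimal sketch in Rust using only the standard library. The names `Msg`, `spawn_counter` and `run_demo` are hypothetical and made up for illustration; this is not how any particular actor framework does it. The sketch shows private state and message passing only. It does not create new actors, and the updated `total` merely stands in for "designate the behaviour to be used for the next message".

```rust
use std::sync::mpsc::{channel, Sender};
use std::thread;

/// Messages the hypothetical counter actor understands.
enum Msg {
    Add(u64),
    /// Carries a reply channel: asking is the only way to observe state.
    Get(Sender<u64>),
    Stop,
}

/// Spawns the actor and returns only its mailbox address.
fn spawn_counter() -> Sender<Msg> {
    let (tx, rx) = channel::<Msg>();
    thread::spawn(move || {
        // Private state: owned by this thread, unreachable from outside.
        let mut total: u64 = 0;
        for msg in rx {
            match msg {
                // The new total shapes how the next message is answered.
                Msg::Add(n) => total += n,
                Msg::Get(reply) => {
                    // Ignore the error if the asker has gone away.
                    let _ = reply.send(total);
                }
                Msg::Stop => break,
            }
        }
    });
    tx
}

/// Talks to the actor purely through messages and returns its answer.
fn run_demo() -> u64 {
    let counter = spawn_counter();
    counter.send(Msg::Add(2)).unwrap();
    counter.send(Msg::Add(40)).unwrap();

    let (reply_tx, reply_rx) = channel();
    counter.send(Msg::Get(reply_tx)).unwrap();
    let total = reply_rx.recv().unwrap();

    counter.send(Msg::Stop).unwrap();
    total
}
```

Calling `run_demo()` yields 42. Note that the privacy of `total` is upheld here by the Rust compiler and a shared address space, not by hardware, so in the terms used in this post it is a software mechanism.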

ISOLATION: The ability to keep multiple instances of software separated so that each instance only sees and can affect itself. – NIST Glossary

Consider three pieces of software A, B and C.

Assume that A gets compromised and is modified to gain new functionality, such as inspecting B and C if it couldn't already, or allowing B and C to interact with each other’s internal state directly. The software-based containment capability is pliable, not durable. Software-based actor frameworks can't really guarantee what the actor model prescribes. They have “happy-path actors”: it is fine, until it isn't.
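A small Rust sketch of what "pliable" means, under the assumption that A and B live in the same address space. The names `actor_b`, `ActorB`, `compromised_a_peek` and `demo_peek` are hypothetical. The language keeps the field `secret` private, yet one `unsafe` pointer cast in modified code is enough to read it.

```rust
mod actor_b {
    /// B's state. The field is private to this module.
    /// repr(C) pins the layout so the sketch below is well defined.
    #[repr(C)]
    pub struct ActorB {
        secret: u64,
    }

    impl ActorB {
        pub fn new(secret: u64) -> Self {
            ActorB { secret }
        }

        /// The intended interface: B changes its own state on a message.
        pub fn handle(&mut self, delta: u64) {
            self.secret = self.secret.wrapping_add(delta);
        }
    }
}

/// What a compromised A can do: bypass the interface and read B's memory.
fn compromised_a_peek(b: &actor_b::ActorB) -> u64 {
    // SAFETY: ActorB is repr(C) with a single u64 field, so a pointer to
    // the struct is also a valid, aligned pointer to that field.
    unsafe { *(b as *const actor_b::ActorB as *const u64) }
}

/// Builds B, sends it one message, then peeks at its private state.
fn demo_peek() -> u64 {
    let mut b = actor_b::ActorB::new(7);
    b.handle(35);
    compromised_a_peek(&b)
}
```

`demo_peek()` returns 42 without B ever being asked. Nothing in the software stack prevents this once A has been modified, which is the sense in which the containment is pliable rather than durable.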

The emperor’s new clothes

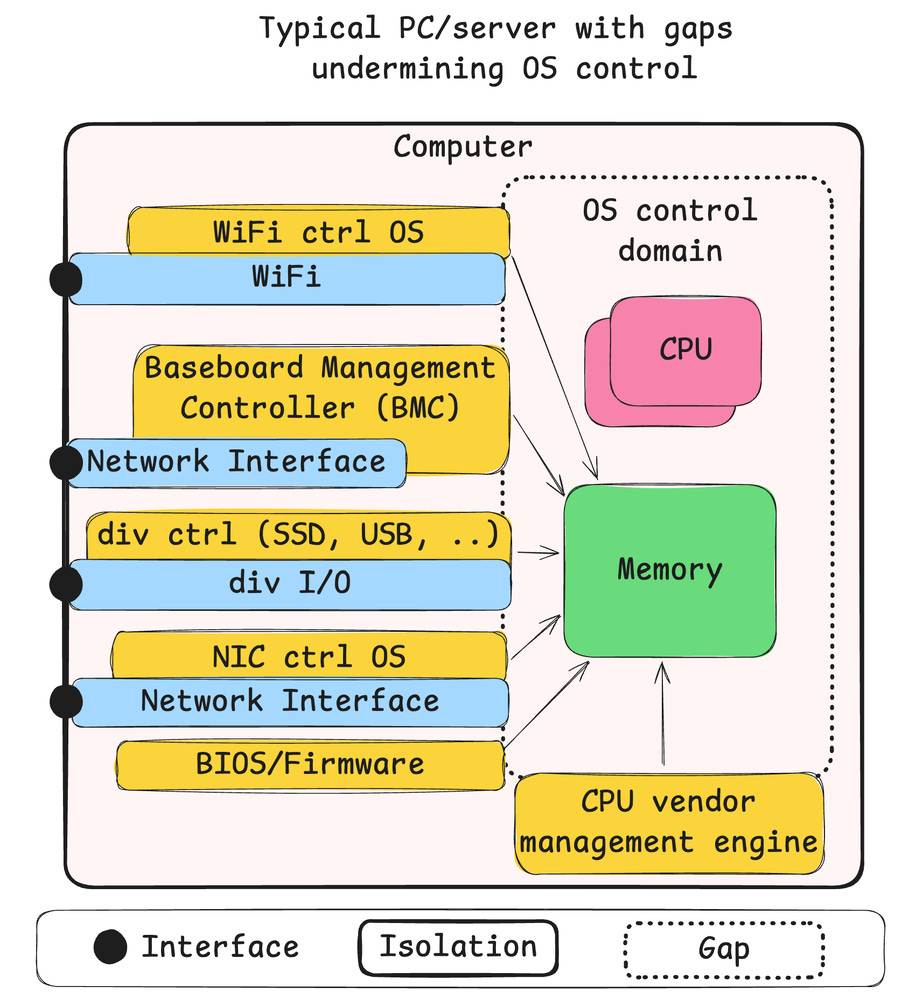

But it gets worse, a lot worse. Consider a typical PC or PC-derived server. There really is only one huge isolated entity, and it contains a lot of black boxes. Let us expand on how we think about defending compute devices. There are surfaces, gaps and interfaces. Surfaces are prepared defensive structures designed to stop anything within the scope of the system. Gaps are places where we haven't prepared any structures, so anyone can get in if they find them. A gap may be an oversight, a hidden backdoor, or simply risk acceptance. Interfaces are the gates of the castle. Your interaction depends on your privileges. But then we have designs like this.

The PC can be thought of as a single actor with multiple compute elements, many of which are completely outside both your control and the OS's. This is perhaps a reason why attempts at a reasonable model for computer security tend to fail. There simply is no way to reason about the security guarantees of systems designed like this, because there obviously aren’t any.

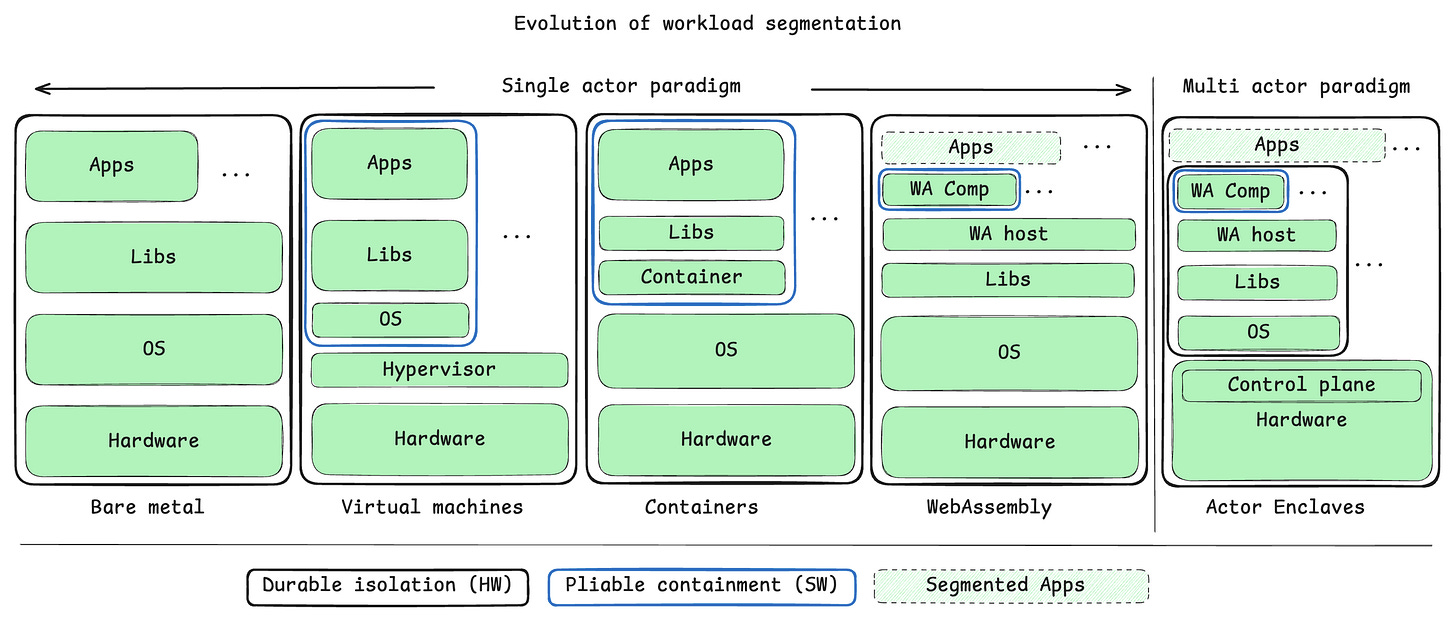

This all leads to the following perspective on the evolution of workload segmentation, where software mechanisms contribute “pliable containment” and hardware contributes “durable isolation”. Of course, software isn't always compromised, but we are considering guaranteed sustainability of capabilities here. This does not mean that pliable containment is useless, but it is a risk. I do realise that the following model takes a swing at every actor framework out there by grouping all of them in the “single actor paradigm”. The goal is not to claim that they are wrong, but to show that they could do so much better with a scale-out von Neumann architecture than they do with the current crop of scale-up von Neumann architectures.

Note how little changes in the last step from “Webassembly” to “Actor Enclaves”. This is the importance of the strategic contract from part two. Massive rebuild of the underlying hardware and OS construct, but no change to the application.

The only way to scale arbitrarily large is to share nothing. Any kind of shared resource will eventually become a bottleneck. By embracing a contract that mandates a polyglot actor model, the software industry would really be enabling the hardware industry to free itself from the von Neumann bottleneck and to deliver arbitrary homogeneous scaling and durable isolation mechanisms back to the software industry, without the software industry giving up its freedom to innovate new programming concepts.

The theme of this blog series can be seen as searching for enabling constraints. So here are some final thoughts as I round off part four.

There is a danger in the “maximum capability strategy” we find in the design goals of technologies like scale-up von Neumann, shared memory, C, C++, Linux/Unix and Git, to mention a few. Adversaries love to "live off the land", so don’t leave sharp objects lying around. It is perhaps time for a new era of enabling constraints, where concepts like scale-out von Neumann, WebAssembly, actors, capability-based security and tagged unions (my favourite enabling constraint in Rust) get a place in the sun.
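For readers who have not met tagged unions, here is a minimal sketch of why I call them an enabling constraint. The type `LinkState` and the function `describe` are hypothetical examples. A value is exactly one of the listed variants, each variant carries only its own data, and the compiler rejects a `match` that forgets a case.

```rust
/// A tagged union: the value is exactly one of these variants.
enum LinkState {
    Down,
    Negotiating { attempt: u8 },
    Up { mbps: u32 },
}

/// The match must cover every variant; leaving one out is a compile error.
fn describe(state: &LinkState) -> String {
    match state {
        LinkState::Down => "down".to_string(),
        LinkState::Negotiating { attempt } => {
            format!("negotiating (attempt {})", attempt)
        }
        // `mbps` only exists when the link is up, so it cannot be read
        // by accident in any other state.
        LinkState::Up { mbps } => format!("up at {} Mbit/s", mbps),
    }
}
```

For example, `describe(&LinkState::Up { mbps: 100 })` gives the string "up at 100 Mbit/s". The constraint removes a class of sharp objects (invalid state combinations) while costing the programmer very little.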

Part four became much more work than anticipated, partly because it cannibalised some topics meant for the promised parts five and six. They will be somewhat postponed.